The intent of this workshop is to educate users about EKS Anywhere and its different use cases. As part of this workshop, we also cover how to provision and manage EKS Anywhere clusters, run workloads, and leverage observability tools like Prometheus and Grafana to monitor the EKS Anywhere cluster. We recommend this workshop for Cloud Architects, SREs, DevOps engineers, and other IT Professionals.


Welcome to EKS Anywhere Workshop!

- 1: Introduction

- 1.1: Overview

- 1.2: Benefits & Use cases

- 1.3: Customer FAQ

- 2: Provisioning

- 2.1: Overview

- 2.2: Admin machine setup

- 2.3: Local cluster setup

- 2.4: Preparing needed for hosting EKS Anywhere on vSphere

- 2.5: vSphere cluster

- 3: Packages

- 3.1: Harbor use cases

1 - Introduction

The following topics are covered as part of this chapter:

- EKS Anywhere service overview

- Benefits & service considerations

- Frequently asked questions (FAQs)

1.1 - Overview

What is the purpose of this workshop?

The purpose of this workshop is to provide a more prescriptive walkthrough of building, deploying, and operating an EKS Anywhere cluster. It uses existing content from the documentation, in a more condensed format for those wishing to get started.

EKS Anywhere Overview

Amazon EKS Anywhere is a new deployment option for Amazon EKS that allows customers to create and operate Kubernetes clusters on customer-managed infrastructure, supported by AWS. Customers can now run Amazon EKS Anywhere on their own on-premises infrastructure using VMware vSphere starting today, with support for other deployment targets in the near future, including support for bare metal coming in 2022.

Amazon EKS Anywhere helps simplify the creation and operation of on-premises Kubernetes clusters with default component configurations while providing tools for automating cluster management. It builds on the strengths of Amazon EKS Distro: the same Kubernetes distribution that powers Amazon EKS on AWS. AWS supports all Amazon EKS Anywhere components including the integrated 3rd-party software, so that customers can reduce their support costs and avoid maintenance of redundant open-source and third-party tools. In addition, Amazon EKS Anywhere gives customers on-premises Kubernetes operational tooling that’s consistent with Amazon EKS. You can leverage the EKS console to view all of your Kubernetes clusters (including EKS Anywhere clusters) running anywhere, through the EKS Connector (public preview).

1.2 - Benefits & Use cases

Here are some key customer benefits of using Amazon EKS Anywhere:

- Simplify on-premises Kubernetes management - Amazon EKS Anywhere helps simplify the creation and operation of on-premises Kubernetes clusters with default component configurations while providing tools for automating cluster management.

- One stop support - AWS supports all Amazon EKS Anywhere components including the integrated 3rd-party software, so that customers can reduce their support costs and avoid maintenance of redundant open-source and third-party tools.

- Consistent and reliable - Amazon EKS Anywhere gives you on-premises Kubernetes operational tooling that’s consistent with Amazon EKS. It builds on the strengths of Amazon EKS Distro and provides open-source software that’s up-to-date and patched, so you can have a Kubernetes environment on-premises that is more reliable than self-managed Kubernetes offerings.

Use-cases supported by EKS Anywhere

EKS Anywhere is suitable for the following use-cases:

- Hybrid cloud consistency - You may have lots of Kubernetes workloads on Amazon EKS but also need to operate Kubernetes clusters on-premises. Amazon EKS Anywhere offers strong operational consistency with Amazon EKS so you can standardize your Kubernetes operations based on a unified toolset.

- Disconnected environment - You may need to secure your applications in a disconnected environment or run applications in areas without internet connectivity. Amazon EKS Anywhere allows you to deploy and operate highly-available clusters with the same Kubernetes distribution that powers Amazon EKS on AWS.

- Application modernization - Amazon EKS Anywhere empowers you to modernize your on-premises applications, removing the heavy lifting of keeping up with upstream Kubernetes and security patches, so you can focus on your core business value.

- Data sovereignty - You may want to keep your large data sets on-premises due to legal requirements concerning the location of the data. Amazon EKS Anywhere brings the trusted Amazon EKS Kubernetes distribution and tools to where your data needs to be.

1.3 - Customer FAQ

AuthN / AuthZ

How do my applications running on EKS Anywhere authenticate with AWS services using IAM credentials?

You can now leverage the IAM Roles for Service Accounts (IRSA) feature. See the IRSA reference guide for details.

Does EKS Anywhere support OIDC (including Azure AD and AD FS)?

Yes, EKS Anywhere can create clusters that support API server OIDC authentication. This means you can federate authentication through AD FS locally or through Azure AD, along with other IDPs that support the OIDC standard. In order to add OIDC support to your EKS Anywhere clusters, you need to configure your cluster by updating the configuration file before creating the cluster. Please see the OIDC reference for details.

Does EKS Anywhere support LDAP?

EKS Anywhere does not support LDAP out of the box. However, you can look into the Dex LDAP Connector.

Can I use AWS IAM for Kubernetes resource access control on EKS Anywhere?

Yes, you can install the aws-iam-authenticator on your EKS Anywhere cluster to achieve this.

Miscellaneous

Can I connect my EKS Anywhere cluster to EKS?

Yes, you can install EKS Connector to connect your EKS Anywhere cluster to AWS EKS. EKS Connector is a software agent that you can install on the EKS Anywhere cluster that enables the cluster to communicate back to AWS. Once connected, you can immediately see the EKS Anywhere cluster with workload and cluster configuration information on the EKS console, alongside your EKS clusters.

How does the EKS Connector authenticate with AWS?

During start-up, the EKS Connector generates and stores an RSA key-pair as Kubernetes secrets. It also registers with AWS using the public key and the activation details from the cluster registration configuration file. The EKS Connector needs AWS credentials to receive commands from AWS and to send the response back. Whenever it requires AWS credentials, it uses its private key to sign the request and invokes AWS APIs to request the credentials.

How does the EKS Connector authenticate with my Kubernetes cluster?

The EKS Connector acts as a proxy and forwards the EKS console requests to the Kubernetes API server on your cluster. In the initial release, the connector uses impersonation with its service account secrets to interact with the API server. Therefore, you need to associate the connector’s service account with a ClusterRole, which gives permission to impersonate AWS IAM entities.

How do I enable an AWS user account to view my connected cluster through the EKS console?

For each AWS user or other IAM identity, you should add a cluster role binding to the Kubernetes cluster with the appropriate permission for that IAM identity. Additionally, each of these IAM entities should be associated with the IAM policy to invoke the EKS Connector on the cluster.

Can I use Amazon Controllers for Kubernetes (ACK) on EKS Anywhere?

Yes, you can leverage AWS services from your EKS Anywhere clusters on-premises through Amazon Controllers for Kubernetes (ACK).

Can I deploy EKS Anywhere on other clouds?

EKS Anywhere can be installed on any infrastructure with the required VMware vSphere versions. See the EKS Anywhere vSphere prerequisite documentation.

How can I manage EKS Anywhere at scale?

You can perform cluster life cycle and configuration management at scale through GitOps-based tools. EKS Anywhere offers git-driven cluster management through the integrated Flux Controller. See the Manage cluster with GitOps documentation for details.

Can I run EKS Anywhere on ESXi?

No. EKS Anywhere is dependent on the vSphere cluster API provider CAPV and it uses the vCenter API. There would need to be a change to the upstream project to support ESXi.

2 - Provisioning

This chapter walks through the following:

- Overview of provisioning

- Prerequisites for creating an EKS Anywhere cluster

- Provisioning a new EKS Anywhere cluster

- Verifying the cluster installation

2.1 - Overview

EKS Anywhere uses the eksctl executable to create a Kubernetes cluster in your environment.

Currently it allows you to create and delete clusters in a vSphere environment.

You can run cluster create and delete commands from an Ubuntu or Mac administrative machine.

To create a cluster, you need to create a specification file that includes all of your vSphere details and information about your EKS Anywhere cluster.

Running the eksctl anywhere create cluster command from your admin machine creates the workload cluster in vSphere.

It does this by first creating a temporary bootstrap cluster to direct the workload cluster creation.

Once the workload cluster is created, the cluster management resources are moved to your workload cluster and the local bootstrap cluster is deleted.

Once your workload cluster is created, a KUBECONFIG file is stored on your admin machine with RBAC admin permissions for the workload cluster.

You’ll be able to use that file with kubectl to set up and deploy workloads.

For a detailed description, see Cluster creation workflow. Here’s a diagram that explains the process visually.

EKS Anywhere Create Cluster


2.2 - Admin machine setup

EKS Anywhere will create and manage Kubernetes clusters on multiple providers. Currently we support creating development clusters locally with Docker and production clusters using VMware vSphere. Other deployment targets will be added in the future, including bare metal support in 2022.

Creating an EKS Anywhere cluster begins with setting up an Administrative machine where you will run Docker and add some binaries. From there, you create the cluster for your chosen provider. See Create cluster workflow for an overview of the cluster creation process.

To create an EKS Anywhere cluster you will need eksctl and the eksctl-anywhere plugin.

This will let you create a cluster in multiple providers for local development or production workloads.

Administrative machine prerequisites

- Docker 20.x.x

- Mac OS (10.15) / Ubuntu (20.04.2 LTS)

- 4 CPU cores

- 16GB memory

- 30GB free disk space

Note

- If you are using Ubuntu, use the Docker CE installation instructions to install Docker and not the Snap installation.

- If you are using Mac OS Docker Desktop 4.4.2 or newer, "deprecatedCgroupv1": true must be set in ~/Library/Group\ Containers/group.com.docker/settings.json.

- Currently, newer versions of Ubuntu (21.10) and other Linux distributions with cgroup v2 enabled are not supported.
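The prerequisites above can be checked with a short script before installing anything. The following is a minimal sketch, not part of EKS Anywhere: the function names are made up for illustration, and it assumes nproc and /proc/meminfo on Linux, sysctl on Mac OS, and a running Docker daemon.

```shell
#!/bin/sh
# Sketch only: compares this machine against the minimums listed above.
# All function names are illustrative and not part of EKS Anywhere.

# Succeeds when integer $1 is greater than or equal to integer $2.
at_least() { [ "$1" -ge "$2" ]; }

# Prints the major component of a dotted version string (20.10.17 -> 20).
major_version() { printf '%s\n' "${1%%.*}"; }

# Converts kilobytes to gigabytes, rounded to the nearest whole number,
# because a nominal 16GB machine usually reports slightly less.
kb_to_gb() { echo $(( ($1 + 524288) / 1048576 )); }

check_admin_machine() {
  case "$(uname -s)" in
    Darwin)
      cores=$(sysctl -n hw.ncpu)
      mem_kb=$(( $(sysctl -n hw.memsize) / 1024 ))
      ;;
    *)
      cores=$(nproc)
      mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
      ;;
  esac
  # Free space on the filesystem that holds the current directory.
  disk_kb=$(df -Pk . | awk 'NR==2 {print $4}')
  docker_version=$(docker version --format '{{.Server.Version}}' 2>/dev/null)

  at_least "$cores" 4 || echo "need 4 CPU cores, found $cores"
  at_least "$(kb_to_gb "$mem_kb")" 16 || echo "need 16GB memory"
  at_least "$(kb_to_gb "$disk_kb")" 30 || echo "need 30GB free disk space"
  [ "$(major_version "${docker_version:-0}")" = "20" ] \
    || echo "need Docker 20.x.x, found ${docker_version:-none}"
}
```

Calling check_admin_machine prints one line per unmet requirement and nothing when all checks pass.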

Install EKS Anywhere CLI tools

Via Homebrew (macOS and Linux)

Warning

EKS Anywhere only works on computers with x86 and amd64 processor architecture.

It currently will not work on computers with Apple Silicon or Arm based processors.

You can install eksctl and eksctl-anywhere with homebrew.

This package will also install kubectl and the aws-iam-authenticator which will be helpful to test EKS Anywhere clusters.

brew install aws/tap/eks-anywhere

Manually (macOS and Linux)

Install the latest release of eksctl.

The EKS Anywhere plugin requires eksctl version 0.66.0 or newer.

curl "https://github.com/weaveworks/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" \
    --silent --location \
    | tar xz -C /tmp
sudo mv /tmp/eksctl /usr/local/bin/
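To confirm that the installed eksctl meets the 0.66.0 minimum mentioned above, a dotted-version comparison can be sketched as follows. The helper name version_ge is hypothetical, and the commented usage line assumes that eksctl version prints a plain dotted version string.

```shell
# Sketch only: succeeds when dotted version $1 is greater than or equal
# to dotted version $2, comparing the numeric fields from left to right.
version_ge() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    n = split(a, x, "."); m = split(b, y, ".")
    len = (n > m) ? n : m
    for (i = 1; i <= len; i++) {
      # Missing fields count as zero, so 1.0 compares like 1.0.0.
      xi = (i <= n) ? x[i] + 0 : 0
      yi = (i <= m) ? y[i] + 0 : 0
      if (xi > yi) exit 0
      if (xi < yi) exit 1
    }
    exit 0
  }'
}

# Example (assumes eksctl is on the PATH):
# version_ge "$(eksctl version)" 0.66.0 || echo "eksctl is older than 0.66.0"
```

A numeric comparison is used rather than a string comparison so that 0.100.0 is correctly treated as newer than 0.66.0.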

Install the eksctl-anywhere plugin.

export EKSA_RELEASE="0.9.1" OS="$(uname -s | tr A-Z a-z)" RELEASE_NUMBER=12
curl "https://anywhere-assets.eks.amazonaws.com/releases/eks-a/${RELEASE_NUMBER}/artifacts/eks-a/v${EKSA_RELEASE}/${OS}/amd64/eksctl-anywhere-v${EKSA_RELEASE}-${OS}-amd64.tar.gz" \
    --silent --location \
    | tar xz ./eksctl-anywhere
sudo mv ./eksctl-anywhere /usr/local/bin/

Upgrade eksctl-anywhere

If you installed eksctl-anywhere via homebrew you can upgrade the binary with

brew update

brew upgrade eks-anywhere

If you installed eksctl-anywhere manually you should follow the installation steps to download the latest release.

You can verify your installed version with

eksctl anywhere version

Deploy a cluster

Once you have the tools installed you can deploy a local cluster or production cluster in the next steps.

2.3 - Local cluster setup

EKS Anywhere docker provider deployments

EKS Anywhere supports a Docker provider for development and testing use cases only. This allows you to try EKS Anywhere on your local system before deploying to a supported provider.

To install the EKS Anywhere binaries and see system requirements, please follow the installation guide.

Steps

- Generate a cluster config

CLUSTER_NAME=dev-cluster
eksctl anywhere generate clusterconfig $CLUSTER_NAME \
   --provider docker > $CLUSTER_NAME.yaml

The command above creates a file named $CLUSTER_NAME.yaml (here, dev-cluster.yaml) with the contents below in the path where it is executed. The configuration specification is divided into two sections:

- Cluster

- DockerDatacenterConfig

apiVersion: anywhere.eks.amazonaws.com/v1alpha1
kind: Cluster
metadata:
  name: dev-cluster
spec:
  clusterNetwork:
    cniConfig:
      cilium: {}
    pods:
      cidrBlocks:
      - 192.168.0.0/16
    services:
      cidrBlocks:
      - 10.96.0.0/12
  controlPlaneConfiguration:
    count: 1
  datacenterRef:
    kind: DockerDatacenterConfig
    name: dev-cluster
  externalEtcdConfiguration:
    count: 1
  kubernetesVersion: "1.21"
  managementCluster:
    name: dev-cluster
  workerNodeGroupConfigurations:
  - count: 1
    name: md-0
---
apiVersion: anywhere.eks.amazonaws.com/v1alpha1
kind: DockerDatacenterConfig
metadata:
  name: dev-cluster
spec: {}

- Create Cluster: Create your cluster either with or without curated packages:

- Cluster creation without curated packages installation

eksctl anywhere create cluster -f $CLUSTER_NAME.yaml

Example command output

Performing setup and validations
✅ validation succeeded {"validation": "docker Provider setup is valid"}
Creating new bootstrap cluster
Installing cluster-api providers on bootstrap cluster
Provider specific setup
Creating new workload cluster
Installing networking on workload cluster
Installing cluster-api providers on workload cluster
Moving cluster management from bootstrap to workload cluster
Installing EKS-A custom components (CRD and controller) on workload cluster
Creating EKS-A CRDs instances on workload cluster
Installing AddonManager and GitOps Toolkit on workload cluster
GitOps field not specified, bootstrap flux skipped
Deleting bootstrap cluster
🎉 Cluster created!

- Cluster creation with optional curated packages

Note

- It is optional to install curated packages as part of the cluster creation.

- The version reported by eksctl anywhere version should be later than v0.9.0.

- If including curated packages during cluster creation, please set the environment variable:

export CURATED_PACKAGES_SUPPORT=true

- Post-creation installation and detailed package configurations can be found in the Package management section.

- Discover curated-packages to install

eksctl anywhere list packages --source registry --kube-version 1.21

Example command output

Package    Version(s)
-------    ----------
harbor     2.5.0-4324383d8c5383bded5f7378efb98b4d50af827b

- Generate a curated-packages config

The example shows how to install the harbor package from the curated package list.

eksctl anywhere generate package harbor --source registry --kube-version 1.21 > packages.yaml

- Create a cluster

# Create a cluster with curated packages installation
eksctl anywhere create cluster -f $CLUSTER_NAME.yaml --install-packages packages.yaml

Example command output

Performing setup and validations
✅ validation succeeded {"validation": "docker Provider setup is valid"}
Creating new bootstrap cluster
Installing cluster-api providers on bootstrap cluster
Provider specific setup
Creating new workload cluster
Installing networking on workload cluster
Installing cluster-api providers on workload cluster
Moving cluster management from bootstrap to workload cluster
Installing EKS-A custom components (CRD and controller) on workload cluster
Creating EKS-A CRDs instances on workload cluster
Installing AddonManager and GitOps Toolkit on workload cluster
GitOps field not specified, bootstrap flux skipped
Deleting bootstrap cluster
🎉 Cluster created!
----------------------------------------------------------------------------------------------------------------
The EKS Anywhere package controller and the EKS Anywhere Curated Packages (referred to as “features”) are provided as “preview features” subject to the AWS Service Terms, (including Section 2 (Betas and Previews)) of the same. During the EKS Anywhere Curated Packages Public Preview, the AWS Service Terms are extended to provide customers access to these features free of charge. These features will be subject to a service charge and fee structure at ”General Availability“ of the features.
----------------------------------------------------------------------------------------------------------------
Installing curated packages controller on workload cluster
package.packages.eks.amazonaws.com/my-harbor created

- Use the cluster

Once the cluster is created you can use it with the generated KUBECONFIG file in your local directory:

export KUBECONFIG=${PWD}/${CLUSTER_NAME}/${CLUSTER_NAME}-eks-a-cluster.kubeconfig
kubectl get ns

Example command output

NAME                                STATUS   AGE
capd-system                         Active   21m
capi-kubeadm-bootstrap-system       Active   21m
capi-kubeadm-control-plane-system   Active   21m
capi-system                         Active   21m
capi-webhook-system                 Active   21m
cert-manager                        Active   22m
default                             Active   23m
eksa-system                         Active   20m
kube-node-lease                     Active   23m
kube-public                         Active   23m
kube-system                         Active   23m

You can now use the cluster like you would any Kubernetes cluster. Deploy the test application with:

kubectl apply -f "https://anywhere.eks.amazonaws.com/manifests/hello-eks-a.yaml"

Verify the test application in the deploy test application section.
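Two small helpers can make the steps above easier to script: one confirms that a generated config file contains both the Cluster and DockerDatacenterConfig sections, and the other builds the kubeconfig path used in the export command. This is a sketch only; config_kinds and kubeconfig_path are hypothetical names, not eksctl features.

```shell
# Sketch only: prints the kind of every YAML document in the file named
# by $1. Only top-level "kind:" keys match, so the nested
# datacenterRef.kind entry is ignored.
config_kinds() { awk '/^kind:/ {print $2}' "$1"; }

# Prints the kubeconfig path that the steps above export for cluster $1,
# relative to directory $2 (defaults to the current directory).
kubeconfig_path() {
  printf '%s/%s/%s-eks-a-cluster.kubeconfig\n' "${2:-$PWD}" "$1" "$1"
}

# Example:
# config_kinds "$CLUSTER_NAME.yaml"
# export KUBECONFIG="$(kubeconfig_path "$CLUSTER_NAME")"
```

For the dev-cluster.yaml file shown in the first step, config_kinds would print Cluster followed by DockerDatacenterConfig.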

Next steps:

- See the Cluster management section for more information on common operational tasks like scaling and deleting the cluster.

- See the Package management section for more information on post-creation curated packages installation.

To verify that a cluster control plane is up and running, use the kubectl command to show that the control plane pods are all running.

kubectl get po -A -l control-plane=controller-manager

NAMESPACE                           NAME                                                             READY   STATUS    RESTARTS   AGE
capi-kubeadm-bootstrap-system       capi-kubeadm-bootstrap-controller-manager-57b99f579f-sd85g       2/2     Running   0          47m
capi-kubeadm-control-plane-system   capi-kubeadm-control-plane-controller-manager-79cdf98fb8-ll498   2/2     Running   0          47m
capi-system                         capi-controller-manager-59f4547955-2ks8t                         2/2     Running   0          47m
capi-webhook-system                 capi-controller-manager-bb4dc9878-2j8mg                          2/2     Running   0          47m
capi-webhook-system                 capi-kubeadm-bootstrap-controller-manager-6b4cb6f656-qfppd       2/2     Running   0          47m
capi-webhook-system                 capi-kubeadm-control-plane-controller-manager-bf7878ffc-rgsm8    2/2     Running   0          47m
capi-webhook-system                 capv-controller-manager-5668dbcd5-v5szb                          2/2     Running   0          47m
capv-system                         capv-controller-manager-584886b7bd-f66hs                         2/2     Running   0          47m
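For scripted checks, the table above can be filtered so that only problem pods are reported. The following sketch assumes the six-column layout shown above (as produced with the -A flag); unhealthy_pods is a hypothetical helper name.

```shell
# Sketch only: reads `kubectl get po -A` style output on stdin and prints
# namespace/name for every pod that is not Running with all of its
# containers ready (for example READY 1/2).
unhealthy_pods() {
  awk 'NR > 1 {
    split($3, ready, "/")
    if ($4 != "Running" || ready[1] != ready[2]) print $1 "/" $2
  }'
}

# Example:
# kubectl get po -A -l control-plane=controller-manager | unhealthy_pods
```

An empty result means every listed control plane pod is Running and fully ready.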

You may also check the status of the cluster control plane resource directly. This can be especially useful to verify clusters with multiple control plane nodes after an upgrade.

kubectl get kubeadmcontrolplanes.controlplane.cluster.x-k8s.io

NAME                       INITIALIZED   API SERVER AVAILABLE   VERSION              REPLICAS   READY   UPDATED   UNAVAILABLE
supportbundletestcluster   true          true                   v1.20.7-eks-1-20-6   1          1       1

To verify that the expected number of cluster worker nodes are up and running, use the kubectl command to show that nodes are Ready.

This will confirm that the expected number of worker nodes are present.

Worker nodes are named using the cluster name followed by the worker node group name (example: my-cluster-md-0).

kubectl get nodes

NAME                                           STATUS   ROLES                  AGE   VERSION
supportbundletestcluster-md-0-55bb5ccd-mrcf9   Ready    <none>                 4m    v1.20.7-eks-1-20-6
supportbundletestcluster-md-0-55bb5ccd-zrh97   Ready    <none>                 4m    v1.20.7-eks-1-20-6
supportbundletestcluster-mdrwf                 Ready    control-plane,master   5m    v1.20.7-eks-1-20-6
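Because worker node names start with the cluster name and the node group name, the number of Ready workers can be counted from the command output. This is a minimal sketch; ready_nodes is a hypothetical helper, and it counts only nodes whose STATUS column is exactly Ready.

```shell
# Sketch only: reads `kubectl get nodes` output on stdin and prints how
# many Ready nodes have a name starting with prefix $1. With no prefix,
# all Ready nodes are counted.
ready_nodes() {
  awk -v prefix="${1:-}" '
    NR > 1 && $2 == "Ready" && (prefix == "" || index($1, prefix) == 1) { n++ }
    END { print n + 0 }'
}

# Example:
# kubectl get nodes | ready_nodes my-cluster-md-0-
```

Applied to the sample output above with the prefix supportbundletestcluster-md-0-, the helper would report 2 worker nodes.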

To test a workload in your cluster you can try deploying the hello-eks-anywhere application.

2.4 - Preparing needed for hosting EKS Anywhere on vSphere

Create a VM and template folder (Optional):

For each user that needs to create workload clusters, have the vSphere administrator create a VM and template folder. That folder will host:

- The VMs of the Control plane and Data plane nodes of each cluster.

- A nested folder for the management cluster and another one for each workload cluster.

- Each cluster VM in its own nested folder under this folder.

User permissions should be set up to:

- Only allow the user to see and create EKS Anywhere resources in that folder and its nested folders.

- Prevent the user from having visibility and control over the whole vSphere cluster domain and its sub-child objects (datacenter, resource pools and other folders).

In your EKS Anywhere configuration file you will reference a path under this folder associated with the cluster you create.

Add a vSphere folder

Follow these steps to create the user’s vSphere folder:

- From vCenter, select the Menus/VM and Template tab.

- Select either a datacenter or another folder as a parent object for the folder that you want to create.

- Right-click the parent object and click New Folder.

- Enter a name for the folder and click OK.

For more details, see the vSphere Create a Folder documentation.

Set up vSphere roles and user permission

You need a vSphere username with the right privileges to create EKS Anywhere clusters on top of your vSphere cluster. You then need to import the latest release of the EKS Anywhere OVA template into your vSphere cluster so it can be used to provision your cluster nodes.

Add a vCenter User

Ask your vSphere administrator to add a vCenter user that will be used for the provisioning of the EKS Anywhere cluster in VMware vSphere.

- Log in with the vSphere Client to the vCenter Server.

- Specify the user name and password for a member of the vCenter Single Sign-On Administrators group.

- Navigate to the vCenter Single Sign-On user configuration UI.

- From the Home menu, select Administration.

- Under Single Sign On, click Users and Groups.

- If vsphere.local is not the currently selected domain, select it from the drop-down menu. You cannot add users to other domains.

- On the Users tab, click Add.

- Enter a user name and password for the new user.

- The maximum number of characters allowed for the user name is 300.

- You cannot change the user name after you create a user. The password must meet the password policy requirements for the system.

- Click Add.

For more details, see the vSphere Add vCenter Single Sign-On Users documentation.

Create and define user roles

When you add a user for creating clusters, that user initially has no privileges to perform management operations. So you have to add this user to groups with the required permissions, or assign a role or roles with the required permission to this user.

Three roles are needed to be able to create the EKS Anywhere cluster:

- Create a global custom role: For example, you could name this EKS Anywhere Global. Define it for the user on the vCenter domain level and its child objects. Create this role with the following privileges:

> Content Library
* Add library item
* Check in a template
* Check out a template
* Create local library
> vSphere Tagging
* Assign or Unassign vSphere Tag
* Assign or Unassign vSphere Tag on Object
* Create vSphere Tag
* Create vSphere Tag Category
* Delete vSphere Tag
* Delete vSphere Tag Category
* Edit vSphere Tag
* Edit vSphere Tag Category
* Modify UsedBy Field For Category
* Modify UsedBy Field For Tag

- Create a user custom role: The second role is also a custom role that you could call, for example, EKS Anywhere User. Define this role on the following objects and their child objects.

- The resource pool level and its child objects. This is the resource pool that the EKS Anywhere VMs will be part of.

- The storage object level and its child objects. This is the storage that will be used to store the cluster VMs.

- The network VLAN object level and its child objects. This is the network that will host the cluster VMs.

- The VM and Template folder level and its child objects.

Create this role with the following privileges:

> Content Library
* Add library item
* Check in a template
* Check out a template
* Create local library
> Datastore
* Allocate space
* Browse datastore
* Low level file operations
> Folder
* Create folder
> vSphere Tagging
* Assign or Unassign vSphere Tag
* Assign or Unassign vSphere Tag on Object
* Create vSphere Tag
* Create vSphere Tag Category
* Delete vSphere Tag
* Delete vSphere Tag Category
* Edit vSphere Tag
* Edit vSphere Tag Category
* Modify UsedBy Field For Category
* Modify UsedBy Field For Tag
> Network
* Assign network
> Resource
* Assign virtual machine to resource pool
> Scheduled task
* Create tasks
* Modify task
* Remove task
* Run task
> Profile-driven storage
* Profile-driven storage view
> Storage views
* View
> vApp
* Import
> Virtual machine
* Change Configuration
  - Add existing disk
  - Add new disk
  - Add or remove device
  - Advanced configuration
  - Change CPU count
  - Change Memory
  - Change Settings
  - Configure Raw device
  - Extend virtual disk
  - Modify device settings
  - Remove disk
* Edit Inventory
  - Create from existing
  - Create new
  - Remove
* Interaction
  - Power off
  - Power on
* Provisioning
  - Clone template
  - Clone virtual machine
  - Create template from virtual machine
  - Customize guest
  - Deploy template
  - Mark as template
  - Read customization specifications
* Snapshot management
  - Create snapshot
  - Remove snapshot
  - Revert to snapshot

- Create a default Administrator role: The third role is the default system role Administrator, which you assign to the user on the folder level and its child objects (VMs and OVA templates) that the vSphere administrator created for you.

To create a role and define privileges, see the Create a vCenter Server Custom Role pages.

Deploy an OVA Template

If the user creating the cluster has permission and network access to create and tag a template, you can skip these steps because EKS Anywhere will automatically download the OVA and create the template if it can. If the user does not have the permissions or network access to create and tag the template, follow this guide. The OVA contains the operating system (Ubuntu or Bottlerocket) for a specific EKS-D Kubernetes release and EKS-A version. The following example uses Ubuntu as the operating system, but a similar workflow would work for Bottlerocket.

Steps to deploy the Ubuntu OVA

- Go to the artifacts page and download the OVA template with the newest EKS-D Kubernetes release to your computer.

- Log in to the vCenter Server.

- Right-click the folder you created above and select Deploy OVF Template. The Deploy OVF Template wizard opens.

- On the Select an OVF template page, select the Local file option, specify the location of the OVA template you downloaded to your computer, and click Next.

- On the Select a name and folder page, enter a unique name for the virtual machine or leave the default generated name, if you do not have other templates with the same name within your vCenter Server virtual machine folder. The default deployment location for the virtual machine is the inventory object where you started the wizard, which is the folder you created above. Click Next.

- On the Select a compute resource page, select the resource pool where to run the deployed VM template, and click Next.

- On the Review details page, verify the OVF or OVA template details and click Next.

- On the Select storage page, select a datastore to store the deployed OVF or OVA template and click Next.

- On the Select networks page, select a source network and map it to a destination network. Click Next.

- On the Ready to complete page, review the page and click Finish. For details, see Deploy an OVF or OVA Template.

To build your own Ubuntu OVA template, check the Building your own Ubuntu OVA section.

To use the deployed OVA template to create the VMs for the EKS Anywhere cluster, you have to tag it with specific values for the os and eksdRelease keys.

The value of the os key is the operating system of the deployed OVA template, which is ubuntu in our scenario.

The value of the eksdRelease key holds the Kubernetes and EKS-D release used in the deployed OVA template.

Check the Customize OVAs page for more details.

Steps to tag the deployed OVA template:

- Go to the artifacts page and take note of the tags and values associated with the OVA template you deployed in the previous step.

- In the vSphere Client, select Menu > Tags & Custom Attributes.

- Select the Tags tab and click Tags.

- Click New.

- In the Create Tag dialog box, copy the os tag name associated with your OVA that you took note of, which in our case is os:ubuntu, and paste it as the name for the first tag required.

- Specify the tag category os if it exists, or create it if it does not exist.

- Click Create.

- Repeat steps 2-4.

- In the Create Tag dialog box, copy the eksdRelease tag name associated with your OVA that you took note of, which in our case is eksdRelease:kubernetes-1-21-eks-8, and paste it as the name for the second tag required.

- Specify the tag category eksdRelease if it exists, or create it if it does not exist.

- Click Create.

- Navigate to the VM and Template tab.

- Select the folder that was created.

- Select the deployed template and click Actions.

- From the drop-down menu, select Tags and Custom Attributes > Assign Tag.

- Select the tags we created from the list and confirm the operation.
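The same tagging can likely be done from the command line with govc instead of the vSphere Client. The sketch below only prints the commands so they can be reviewed before running. It assumes govc is installed and configured for your vCenter, and that the tags.category.create, tags.create, and tags.attach subcommands behave as in current govc releases. The helper name and the template path in the example are hypothetical.

```shell
# Sketch only: prints (does not run) the govc commands that would create
# and attach the os and eksdRelease tags.
# $1 = os value, $2 = eksdRelease value, $3 = inventory path of the template.
print_tag_commands() {
  for pair in "os:$1" "eksdRelease:$2"; do
    # The category is the part of the tag name before the colon.
    category=${pair%%:*}
    echo "govc tags.category.create -t VirtualMachine $category"
    echo "govc tags.create -c $category $pair"
    echo "govc tags.attach $pair $3"
  done
}

# Example with a hypothetical template path:
# print_tag_commands ubuntu kubernetes-1-21-eks-8 /MyDatacenter/vm/my-folder/my-template
```

If a tag category already exists, the corresponding tags.category.create command can be skipped.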

To run EKS Anywhere, you will need:

Prepare Administrative machine

Set up an Administrative machine as described in Install EKS Anywhere.

Prepare a VMware vSphere environment

To prepare a VMware vSphere environment to run EKS Anywhere, you need the following:

- A vSphere 7+ environment running vCenter

- Capacity to deploy 6-10 VMs

- DHCP running in vSphere environment in the primary VM network for your workload cluster

- One network in vSphere to use for the cluster. This network must have inbound access into vCenter

- An OVA imported into vSphere and converted into a template for the workload VMs

- User credentials to create VMs and attach networks, etc

- One IP address routable from cluster but excluded from DHCP offering. This IP address is to be used as the Control Plane Endpoint IP or kube-vip VIP address

Below are some suggestions to ensure that this IP address is never handed out by your DHCP server.

You may need to contact your network engineer.

- Pick an IP address reachable from the cluster subnet which is excluded from the DHCP range OR

- Alter DHCP ranges to leave out one or more IP addresses at the top and/or the bottom of the range OR

- Create an IP reservation for this IP on your DHCP server. This is usually accomplished by adding a dummy mapping of this IP address to a non-existent MAC address.
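Whichever option you choose, it can help to double-check that the chosen address really falls outside the DHCP range. The following sketch compares IPv4 addresses numerically. The helper names and the example addresses are illustrative only, and octets are assumed to have no leading zeros.

```shell
# Sketch only: converts a dotted IPv4 address to an integer. The body
# runs in a subshell so the IFS change does not leak to the caller.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( $1 * 16777216 + $2 * 65536 + $3 * 256 + $4 ))
)

# Succeeds when address $1 lies outside the DHCP range $2..$3 (inclusive).
outside_dhcp_range() {
  ip=$(ip_to_int "$1"); start=$(ip_to_int "$2"); end=$(ip_to_int "$3")
  [ "$ip" -lt "$start" ] || [ "$ip" -gt "$end" ]
}

# Example with made-up addresses:
# outside_dhcp_range 192.168.1.10 192.168.1.100 192.168.1.200 && echo "safe to use"
```

This only checks the range arithmetic. It does not confirm that the address is unused or routable from the cluster.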

Each VM will require:

- 2 vCPUs

- 8GB RAM

- 25GB Disk
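Combining the per-VM figures with the 6-10 VM capacity mentioned earlier gives a rough total to request from your vSphere administrator. A minimal sketch of that arithmetic follows; vm_capacity is a hypothetical helper name.

```shell
# Sketch only: prints the total vCPUs, RAM, and disk needed for $1 VMs
# at 2 vCPUs, 8GB RAM, and 25GB disk each.
vm_capacity() {
  echo "vcpus=$(( $1 * 2 )) ram_gb=$(( $1 * 8 )) disk_gb=$(( $1 * 25 ))"
}

vm_capacity 6    # prints: vcpus=12 ram_gb=48 disk_gb=150
vm_capacity 10   # prints: vcpus=20 ram_gb=80 disk_gb=250
```

These totals cover only the cluster VMs and leave out any overhead that vSphere itself needs.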

The administrative machine and the target workload environment will need network access to:

- public.ecr.aws

- anywhere-assets.eks.amazonaws.com (to download the EKS Anywhere binaries, manifests and OVAs)

- distro.eks.amazonaws.com (to download EKS Distro binaries and manifests)

- d2glxqk2uabbnd.cloudfront.net (for EKS Anywhere and EKS Distro ECR container images)

- api.github.com (only if GitOps is enabled)
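One way to confirm this network access from the administrative machine is to probe each endpoint over HTTPS. The sketch below assumes curl is installed; the function names are illustrative, and any HTTP response (even an error status) is treated as proof that the host is reachable.

```shell
# Endpoints listed above; api.github.com is only needed when GitOps is enabled.
REQUIRED_ENDPOINTS="public.ecr.aws anywhere-assets.eks.amazonaws.com distro.eks.amazonaws.com d2glxqk2uabbnd.cloudfront.net"

# Probe one host over HTTPS. Any HTTP response counts as reachable.
probe_https() {
  curl --silent --max-time 10 --output /dev/null "https://$1"
}

# Run a probe command against each host and report the result.
# Returns non-zero if at least one host could not be reached.
check_endpoints() {
  probe=$1
  shift
  failures=0
  for host in "$@"; do
    if "$probe" "$host"; then
      echo "reachable $host"
    else
      echo "unreachable $host"
      failures=$(( $failures + 1 ))
    fi
  done
  [ "$failures" -eq 0 ]
}

# Usage (requires network access):
#   check_endpoints probe_https $REQUIRED_ENDPOINTS
```

Passing the probe as a parameter keeps the loop independent of curl, so a different check (for example through a proxy) can be swapped in.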

vSphere information needed before creating the cluster

You need to get the following information before creating the cluster:

-

Static IP Addresses: You will need one IP address for the management cluster control plane endpoint, and a separate one for the control plane of each workload cluster you add.

Let’s say you are going to have the management cluster and two workload clusters. For those, you would need three IP addresses, one for each. All of those addresses will be configured the same way in the configuration file you will generate for each cluster.

A static IP address will be used for each control plane VM in your EKS Anywhere cluster. Choose IP addresses in your network range that do not conflict with other VMs and make sure they are excluded from your DHCP offering.

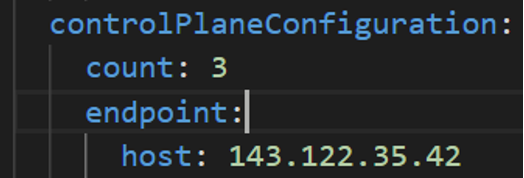

An IP address will be the value of the property controlPlaneConfiguration.endpoint.host in the config file of the management cluster. A separate IP address must be assigned for each workload cluster.

-

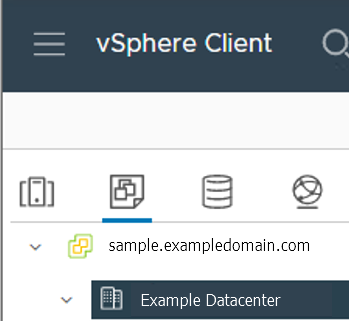

vSphere Datacenter Name: The vSphere datacenter to deploy the EKS Anywhere cluster on.

-

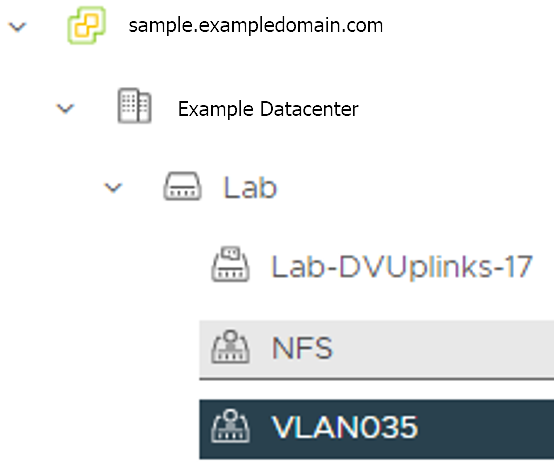

VM Network Name: The VM network to deploy your EKS Anywhere cluster on.

-

vCenter Server Domain Name: The vCenter server fully qualified domain name or IP address. If the server IP is used, the thumbprint must be set or insecure must be set to true.

-

thumbprint (required if insecure=false): The SHA1 thumbprint of the vCenter server certificate which is only required if you have a self-signed certificate for your vSphere endpoint.

There are several ways to obtain your vCenter thumbprint. If you have govc installed, you can run the following command in the Administrative machine terminal and take note of the output:

    govc about.cert -thumbprint -k

-

template: The VM template to use for your EKS Anywhere cluster. This template was created when you imported the OVA file into vSphere.

-

datastore: The vSphere datastore to deploy your EKS Anywhere cluster on.

-

folder: The folder parameter in VSphereMachineConfig allows you to organize the VMs of an EKS Anywhere cluster. With this, each cluster can be organized as a folder in vSphere. You will have a separate folder for the management cluster and each cluster you are adding.

-

resourcePool: The vSphere Resource pools for your VMs in the EKS Anywhere cluster. If there is a resource pool:

/<datacenter>/host/<resource-pool-name>/Resources
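If govc is not available, the thumbprint described above can also be read with the openssl command line tool. This is a sketch under assumptions: openssl is installed, the vCenter server listens on port 443, and the host name is a placeholder. The helper names are illustrative.

```shell
# Strip the "SHA1 Fingerprint=" style prefix that openssl prints, keeping only
# the colon-separated hex digits.
extract_thumbprint() {
  sed -e 's/^.*=//'
}

# Fetch the server certificate and print its SHA1 thumbprint.
vcenter_thumbprint() {
  openssl s_client -connect "$1:443" </dev/null 2>/dev/null \
    | openssl x509 -noout -fingerprint -sha1 \
    | extract_thumbprint
}

# Usage (hypothetical host name):
#   vcenter_thumbprint sample.exampledomain.com
```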

2.5 - vSphere cluster

EKS Anywhere supports a vSphere provider for production grade EKS Anywhere deployments. EKS Anywhere allows you to provision and manage Amazon EKS on your own infrastructure.

This document walks you through setting up EKS Anywhere in a way that:

- Deploys an initial cluster on your vSphere environment. That cluster can be used as a self-managed cluster (to run workloads) or a management cluster (to create and manage other clusters)

- Deploys zero or more workload clusters from the management cluster

If your initial cluster is a management cluster, it is intended to stay in place so you can use it later to modify, upgrade, and delete workload clusters. Using a management cluster makes it faster to provision and delete workload clusters. Also it lets you keep vSphere credentials for a set of clusters in one place: on the management cluster. The alternative is to simply use your initial cluster to run workloads.

Important

Creating an EKS Anywhere management cluster is the recommended model. Separating management features into a separate, persistent management cluster provides a cleaner model for managing the lifecycle of workload clusters (to create, upgrade, and delete clusters), while workload clusters run user applications. This approach also reduces provider permissions for workload clusters.

Prerequisite Checklist

EKS Anywhere needs to be run on an administrative machine that has certain machine requirements. An EKS Anywhere deployment will also require the availability of certain resources from your VMware vSphere deployment.

Steps

The following steps are divided into two sections:

- Create an initial cluster (used as a management or self-managed cluster)

- Create zero or more workload clusters from the management cluster

Create an initial cluster

Follow these steps to create an EKS Anywhere cluster that can be used either as a management cluster or as a self-managed cluster (for running workloads itself).

All steps listed below should be executed on the admin machine, which must be able to reach the vSphere environment where the EKS Anywhere clusters are created.

-

Generate an initial cluster config (named mgmt-cluster for this example):

    export MGMT_CLUSTER_NAME=mgmt-cluster
    eksctl anywhere generate clusterconfig $MGMT_CLUSTER_NAME \
      --provider vsphere > $MGMT_CLUSTER_NAME.yaml

The command above creates a config file named mgmt-cluster.yaml in the path where it is executed. Refer to vsphere configuration for information on configuring this cluster config for a vSphere provider.

The configuration specification is divided into three sections:

- Cluster

- VSphereDatacenterConfig

- VSphereMachineConfig

Some key considerations and configuration parameters:

-

Create at least two control plane nodes, three worker nodes, and three etcd nodes for a production cluster, to provide high availability and rolling upgrades.

-

The osFamily parameter in VSphereMachineConfig (the operating system on the virtual machines) is set to bottlerocket by default. Permitted values: ubuntu, bottlerocket.

-

The recommended mode of deploying etcd on EKS Anywhere production clusters is unstacked (etcd members have dedicated machines and are not collocated with control plane components). More information here. The generated config file comes with external etcd enabled already. So leave this part as it is.

-

Apart from the base configuration, you can optionally add additional configuration to enable supported EKS Anywhere functionalities.

- OIDC

- etcd (comes by default with the generated config file)

- proxy

- GitOps

- IAM for Pods

- IAM Authenticator

- container registry mirror

For now, you have to decide which features you want to enable on your cluster before cluster creation. Enabling them after creation requires you to delete and recreate the cluster. However, the next EKS-A release will remove this limitation.

-

To manage cluster resources using GitOps, you need to enable the GitOps configuration on the initial/management cluster. You cannot enable GitOps on workload clusters as long as you have enabled it on the initial/management cluster. If you want to manage the deployment of Kubernetes resources on a workload cluster, you need to bootstrap Flux against that workload cluster manually to be able to deploy Kubernetes resources to it using GitOps.

-

Modify the initial cluster generated config (mgmt-cluster.yaml) as follows. You will notice that the generated config file comes with the following fields with empty values. All you need to do is fill them with the values gathered on the prerequisites page.

-

Cluster: controlPlaneConfiguration.endpoint.host: ""

    controlPlaneConfiguration:
      count: 3
      endpoint:
        # Fill this value with the IP address you want to use for the management
        # cluster control plane endpoint. You will also need a separate one for the
        # control plane of each workload cluster you add later.
        host: ""

-

VSphereDatacenterConfig:

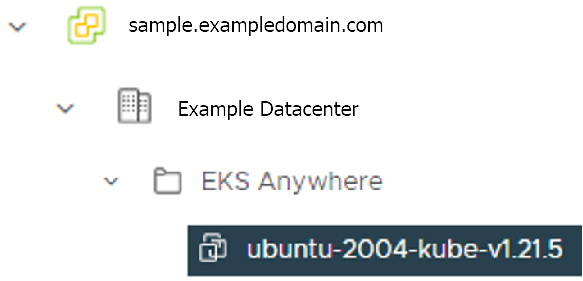

    datacenter: "" # Fill it with the vSphere Datacenter Name. Example: "Example Datacenter"
    insecure: false
    network: "" # Fill it with the VM Network Name. Example: "/Example Datacenter/network/VLAN035"
    server: "" # Fill it with the vCenter Server Domain Name. Example: "sample.exampledomain.com"
    thumbprint: "" # Fill it with the thumbprint of your vCenter server. Example: "BF:B5:D4:C5:72:E4:04:40:F7:22:99:05:12:F5:0B:0E:D7:A6:35:36"

-

VSphereMachineConfig sections:

datastore: "" # Fill in the vSphere datastore name: Example "/Example Datacenter/datastore/LabStorage" diskGiB: 25 # Fill in the folder name that the VMs of the cluster will be organized under. # You will have a separate folder for the management cluster and each cluster you are adding. folder: "" # Fill in the foler name Example: /Example Datacenter/vm/EKS Anywhere/mgmt-cluster memoryMiB: 8192 numCPUs: 2 osFamily: ubuntu # You can set it to botllerocket or ubuntu resourcePool: "" # Fill in the vSphere Resource pool. Example: /Example Datacenter/host/Lab/Resources- Remove the

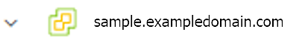

usersproperty, and it will be genrated during the cluster creation automatically. It will set the username tocapvif osFamily=ubuntu, andec2-userif osFamily=botllerocket which is the default option. It will also generate an SSH Key pair, that you can use later to connect to your cluster VMs. - Add template property if you chose to import the EKS-A VM OVA template, and set it to the VM template you imported. Check the vSphere preparation steps

template: /Example Datacenter/vm/EKS Anywhere/ubuntu-2004-kube-v1.21.2 - Remove the

Refer to vsphere configuration for more information on the configuration options available for a vSphere provider.

-

-

Set Credential Environment Variables

Before you create the initial/management cluster, you will need to set and export these environment variables for your vSphere user name and password. Make sure you use single quotes around the values so that your shell does not interpret them.

    # vCenter User Credentials
    export GOVC_URL='[vCenter Server Domain Name]' # Example: https://sample.exampledomain.com
    export GOVC_USERNAME='[vSphere user name]' # Example: USER1@exampledomain
    export GOVC_PASSWORD='[vSphere password]'
    export GOVC_INSECURE=true
    export EKSA_VSPHERE_USERNAME='[vSphere user name]' # Example: USER1@exampledomain
    export EKSA_VSPHERE_PASSWORD='[vSphere password]'

-

Set License Environment Variable

If you are creating a licensed cluster, set and export the license variable (see License cluster if you are licensing an existing cluster):

    export EKSA_LICENSE='my-license-here'

-

Now you are ready to create a cluster with the basic settings.

Important

If you plan to enable other components such as GitOps, OIDC, IAM for Pods, etc., skip creating the cluster now and first add the configuration for those components to your generated config file. Otherwise, you would need to recreate the cluster, as mentioned above.

After you have finished adding all the needed configuration to your configuration file mgmt-cluster.yaml and set your credential environment variables, you are ready to create the cluster. Run the create command with the option -v 9 to get the highest level of verbosity, in case you need to troubleshoot any issue that happens during cluster creation. You may also want to redirect the output to a file so you can look at it later.

    eksctl anywhere create cluster -f $MGMT_CLUSTER_NAME.yaml \
      -v 9 > $MGMT_CLUSTER_NAME-$(date "+%Y%m%d%H%M").log 2>&1

-

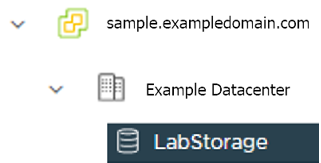

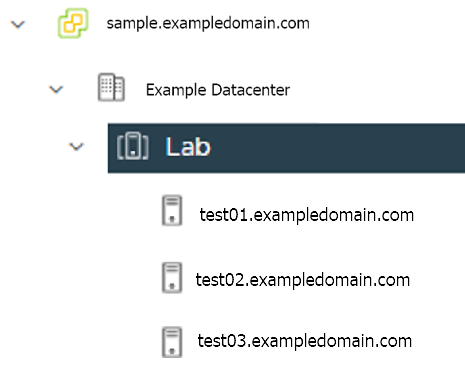

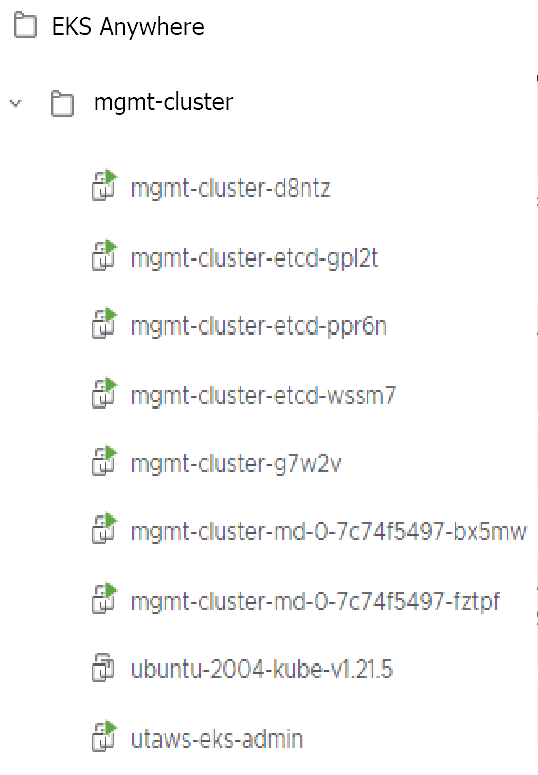

With the completion of the above steps, the management EKS Anywhere cluster is created in the configured vSphere environment under a sub-folder of the EKS Anywhere folder. You can see the cluster VMs from the vSphere console as below:

-

Once the cluster is created, a folder named after the cluster is created on the admin machine. It contains the kubeconfig file and the cluster configuration file used to create the cluster, in addition to the generated SSH key pair that you can use to SSH into the VMs of the cluster.

    ls mgmt-cluster/

Output

    eks-a-id_rsa      mgmt-cluster-eks-a-cluster.kubeconfig
    eks-a-id_rsa.pub  mgmt-cluster-eks-a-cluster.yaml

-

Now you can use your cluster with the generated KUBECONFIG file:

    export KUBECONFIG=${PWD}/${MGMT_CLUSTER_NAME}/${MGMT_CLUSTER_NAME}-eks-a-cluster.kubeconfig
    kubectl cluster-info

The cluster endpoint in the output of this command would be the controlPlaneConfiguration.endpoint.host provided in the mgmt-cluster.yaml config file.

-

Check the cluster nodes:

To check that the cluster completed, list the machines to see the control plane, etcd, and worker nodes:

    kubectl get machines -A

Example command output

    NAMESPACE     NAME                PROVIDERID       PHASE     VERSION
    eksa-system   mgmt-b2xyz          vsphere:/xxxxx   Running   v1.21.2-eks-1-21-5
    eksa-system   mgmt-etcd-r9b42     vsphere:/xxxxx   Running
    eksa-system   mgmt-md-8-6xr-rnr   vsphere:/xxxxx   Running   v1.21.2-eks-1-21-5
    ...

The etcd machine doesn't show the Kubernetes version because it doesn't run the kubelet service.

-

Check the initial/management cluster’s CRD:

To ensure you are looking at the initial/management cluster, list the CRD to see that the name of its management cluster is itself:

    kubectl get clusters mgmt -o yaml

Example command output

    ...
    kubernetesVersion: "1.21"
    managementCluster:
      name: mgmt
    workerNodeGroupConfigurations:
    ...

Note

The initial cluster is now ready to deploy workload clusters. However, if you just want to use it to run workloads, you can deploy pod workloads directly on the initial cluster without deploying a separate workload cluster and skip the section on running separate workload clusters.
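Because the create command in the steps above depends on a fully populated config file and exported credentials, a small pre-flight check can catch omissions before a long create run. The sketch below is only an illustration: both function names are hypothetical, the empty-value match is a simple text search rather than a YAML parser, and some fields may be left empty on purpose (for example thumbprint when insecure is true).

```shell
# Print "line:field" for every key in the config file whose value is still "".
find_empty_fields() {
  grep -nE '^[[:space:]]*[A-Za-z]+:[[:space:]]*""' "$1" \
    | sed -E 's/^([0-9]+):[[:space:]]*([A-Za-z]+):.*/\1:\2/'
}

# Print the name of each credential variable that is unset or empty.
missing_credentials() {
  for name in EKSA_VSPHERE_USERNAME EKSA_VSPHERE_PASSWORD; do
    eval "value=\${$name:-}"
    [ -n "$value" ] || echo "$name"
  done
}

# Usage, before running eksctl anywhere create cluster:
#   find_empty_fields "$MGMT_CLUSTER_NAME.yaml"
#   missing_credentials
```

Empty output from both functions suggests the config file and credentials are ready; any printed field or variable name is worth reviewing first.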

Create separate workload clusters

Follow these steps if you want to use your initial cluster to create and manage separate workload clusters. All steps listed below should be executed on the same admin machine that the management cluster was created on.

-

Generate a workload cluster config:

    export WORKLOAD_CLUSTER_NAME='w01-cluster'
    export MGMT_CLUSTER_NAME='mgmt-cluster'
    eksctl anywhere generate clusterconfig $WORKLOAD_CLUSTER_NAME \
      --provider vsphere > $WORKLOAD_CLUSTER_NAME.yaml

The command above creates a file named w01-cluster.yaml with similar contents to the mgmt-cluster.yaml file that was generated for the management cluster in the previous section. It will be generated in the path where it is executed.

The same key considerations and configuration parameters mentioned above for the initial cluster apply to the workload cluster as well.

-

Refer to the initial config described earlier for the required and optional settings. The main differences are that you must have a new cluster name and cannot use the same vSphere resources.

-

Modify the generated workload cluster config parameters the same way you did in the generated configuration file of the management cluster. The only differences are in the following fields:

-

controlPlaneConfiguration.endpoint.host: As with the initial cluster, each workload cluster needs its own IP address in the Cluster field controlPlaneConfiguration.endpoint.host. Use a different IP address from the one used for the management cluster.

-

managementCluster.name: By default, when you generate the configuration file, the value of this field is the same as the cluster name. Because this workload cluster is to be managed by the management cluster, change the value to the management cluster name.

    managementCluster:
      name: mgmt-cluster # the name of the initial/management cluster

-

VSphereMachineConfig.folder: It is recommended to have a separate folder path for each cluster you add, for organization purposes.

folder: /Example Datacenter/vm/EKS Anywhere/w01-cluster

Other than that all other parameters will be configured the same way.

-

-

Create a workload cluster

Important

If you plan to enable other components such as OIDC, IAM for Pods, etc., skip creating the cluster now and first add the configuration for those components to your generated config file. Otherwise, you would need to recreate the cluster. If GitOps has been enabled on the initial/management cluster, you do not have the option to enable GitOps on the workload cluster, as the goal of using GitOps is to centrally manage all of your clusters.

To create a new workload cluster from your management cluster, run this command, identifying:

- The workload cluster yaml file

- The initial cluster’s credentials (this causes the workload cluster to be managed from the management cluster)

    eksctl anywhere create cluster \
      -f $WORKLOAD_CLUSTER_NAME.yaml \
      --kubeconfig $MGMT_CLUSTER_NAME/$MGMT_CLUSTER_NAME-eks-a-cluster.kubeconfig \
      -v 9 > $WORKLOAD_CLUSTER_NAME-$(date "+%Y%m%d%H%M").log 2>&1

As noted earlier, adding the --kubeconfig option tells eksctl to use the management cluster identified by that kubeconfig file to create a different workload cluster.

-

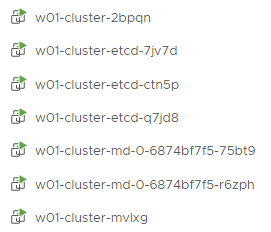

With the completion of the above steps, the workload EKS Anywhere cluster is created in the configured vSphere environment under a sub-folder of the EKS Anywhere folder. You can see the cluster VMs from the vSphere console as below:

-

Once the cluster is created, a folder named after the cluster is created on the admin machine. It contains the kubeconfig file and the cluster configuration file used to create the cluster, in addition to the generated SSH key pair that you can use to SSH into the VMs of the cluster.

    ls w01-cluster/

Output

    eks-a-id_rsa      w01-cluster-eks-a-cluster.kubeconfig
    eks-a-id_rsa.pub  w01-cluster-eks-a-cluster.yaml

-

You can list the workload clusters managed by the management cluster.

    export KUBECONFIG=${PWD}/${MGMT_CLUSTER_NAME}/${MGMT_CLUSTER_NAME}-eks-a-cluster.kubeconfig
    kubectl get clusters

-

Check the workload cluster:

You can now use the workload cluster as you would any Kubernetes cluster. Change your credentials to point to the kubeconfig file of the new workload cluster, then get the cluster info:

    export KUBECONFIG=${PWD}/${WORKLOAD_CLUSTER_NAME}/${WORKLOAD_CLUSTER_NAME}-eks-a-cluster.kubeconfig
    kubectl cluster-info

The cluster endpoint in the output of this command should be the controlPlaneConfiguration.endpoint.host provided in the w01-cluster.yaml config file.

-

To verify that the expected number of cluster worker nodes are up and running, use the kubectl command to show that nodes are Ready.

    kubectl get nodes

-

Test deploying an application with:

    kubectl apply -f "https://anywhere.eks.amazonaws.com/manifests/hello-eks-a.yaml"

Verify the test application in the deploy test application section.

-

Add more workload clusters:

To add more workload clusters, go through the same steps used for creating the initial workload cluster, copying the config file to a new name (such as w02-cluster.yaml), modifying resource names, and running the create cluster command again.

See the Cluster management section for more information on common operational tasks like scaling and deleting the cluster.
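When working with a management cluster and several workload clusters, switching KUBECONFIG by hand becomes repetitive. The sketch below wraps the path convention used in the export commands above (a folder named after the cluster, containing a file ending in -eks-a-cluster.kubeconfig). The helper names are illustrative, and the second argument of kubeconfig_for is an assumed optional base directory that defaults to the current directory.

```shell
# Build the kubeconfig path for a cluster name, relative to the directory the
# create command was run from (second argument, defaults to $PWD).
kubeconfig_for() {
  echo "${2:-$PWD}/$1/$1-eks-a-cluster.kubeconfig"
}

# Point kubectl at a cluster by name.
use_cluster() {
  KUBECONFIG=$(kubeconfig_for "$1")
  export KUBECONFIG
}

# Usage:
#   use_cluster mgmt-cluster && kubectl get clusters
#   use_cluster w01-cluster  && kubectl get nodes
```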

3 - Packages

This chapter walks through the following:

- Harbor use cases

3.1 - Harbor use cases

Important

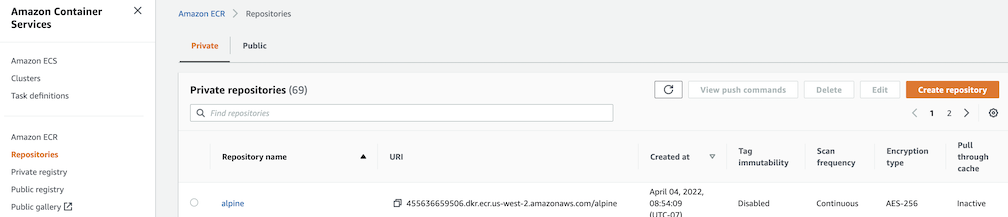

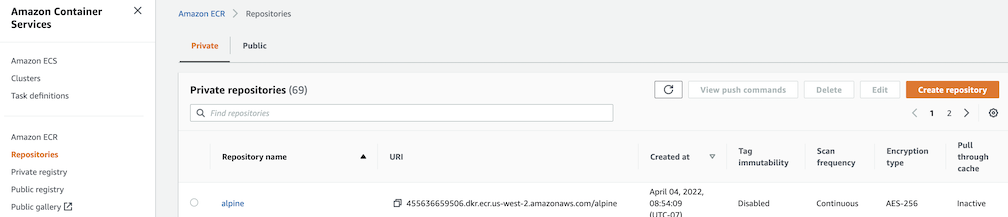

To install the Harbor package, please follow the installation guide.

Proxy a public Amazon Elastic Container Registry (ECR) repository

This use case is to use Harbor to proxy and cache images from a public ECR repository, which helps limit the number of requests made to a public ECR repository, avoiding consuming too much bandwidth or being throttled by the registry server.

-

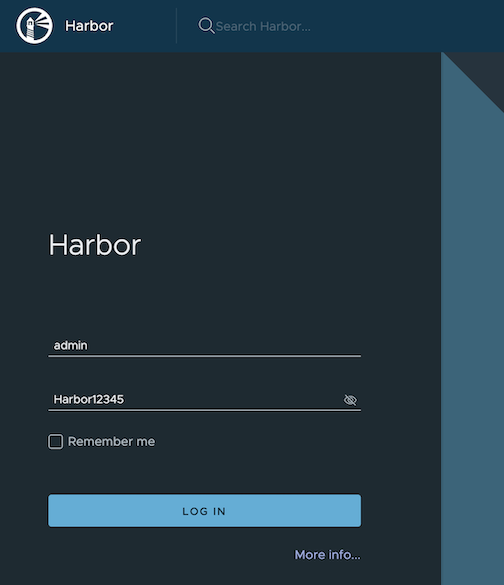

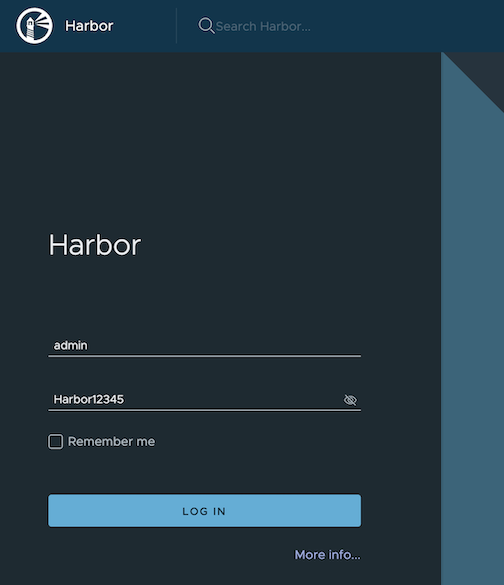

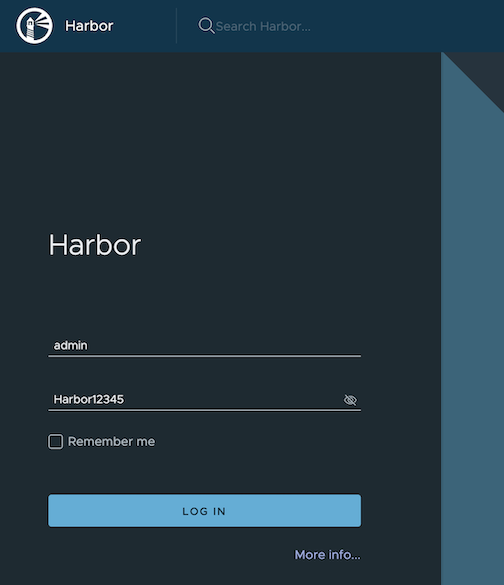

Login

Log in to the Harbor web portal with the default credential as shown below

admin Harbor12345

-

Create a registry proxy

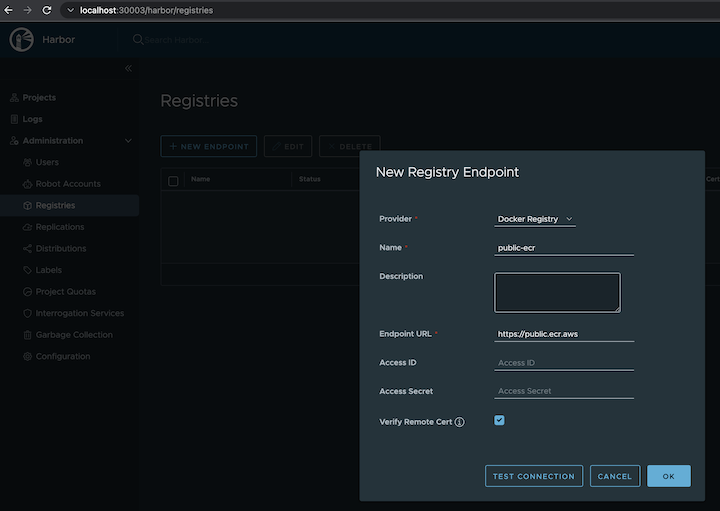

Navigate to Registries on the left panel, and then click on the NEW ENDPOINT button. Choose Docker Registry as the Provider, enter public-ecr as the Name, and enter https://public.ecr.aws/ as the Endpoint URL. Save it by clicking on OK.

-

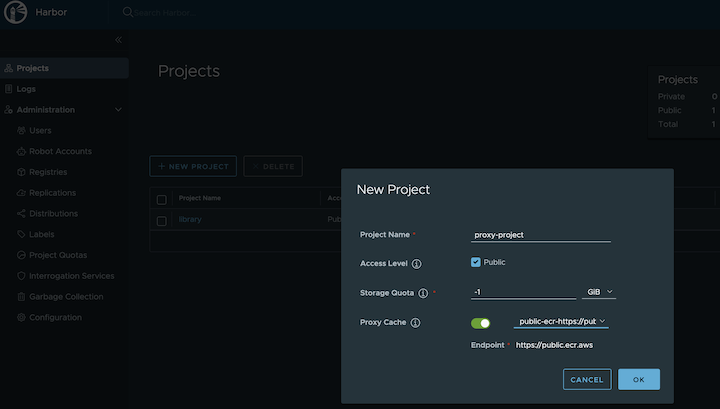

Create a proxy project

Navigate to Projects on the left panel and click on the NEW PROJECT button. Enter proxy-project as the Project Name, check Public access level, turn on Proxy Cache, and choose public-ecr from the pull-down list. Save the configuration by clicking on OK.

-

Pull images

Note

harbor.eksa.demo:30003 should be replaced with whatever externalURL is set to in the Harbor package YAML file.

docker pull harbor.eksa.demo:30003/proxy-project/cloudwatch-agent/cloudwatch-agent:latest
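The pull command above follows a simple naming pattern: the upstream registry host is replaced by the Harbor address plus the proxy project name. The sketch below spells out that rewrite. The function name is illustrative, and the upstream reference public.ecr.aws/cloudwatch-agent/cloudwatch-agent:latest is inferred from the pull command rather than stated in the text.

```shell
# Rewrite an upstream image reference so it is pulled through a Harbor proxy project.
#   $1: Harbor address (the host and port from externalURL)
#   $2: proxy project name
#   $3: upstream registry host
#   $4: upstream image reference
proxy_image_ref() {
  path=${4#"$3"/}          # drop the upstream registry prefix
  echo "$1/$2/$path"
}

proxy_image_ref harbor.eksa.demo:30003 proxy-project public.ecr.aws \
  public.ecr.aws/cloudwatch-agent/cloudwatch-agent:latest
# prints harbor.eksa.demo:30003/proxy-project/cloudwatch-agent/cloudwatch-agent:latest
```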

Proxy a private Amazon Elastic Container Registry (ECR) repository

This use case is to use Harbor to proxy and cache images from a private ECR repository, which helps limit the number of requests made to a private ECR repository, avoiding consuming too much bandwidth or being throttled by the registry server.

-

Login

Log in to the Harbor web portal with the default credential as shown below

admin Harbor12345

-

Create a registry proxy

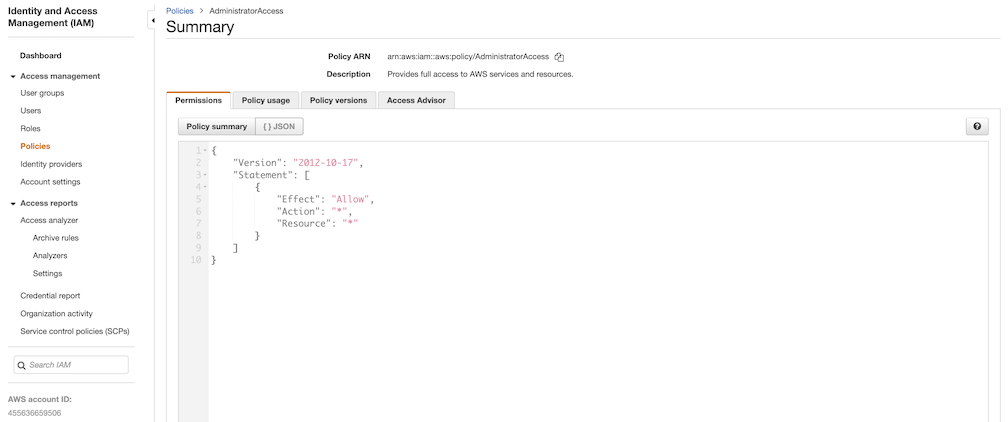

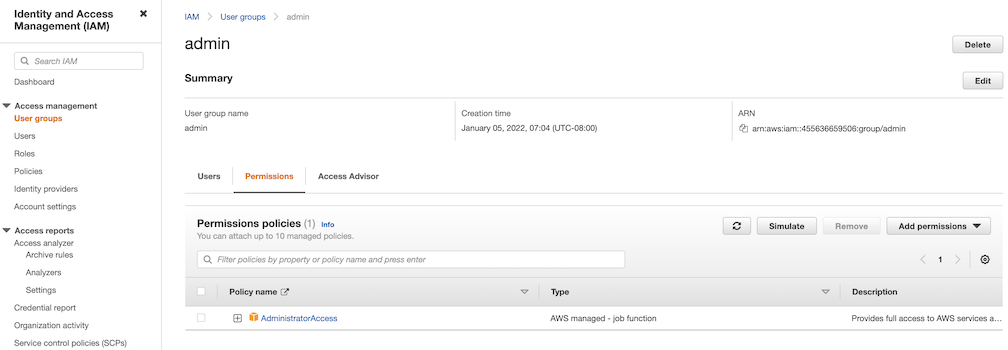

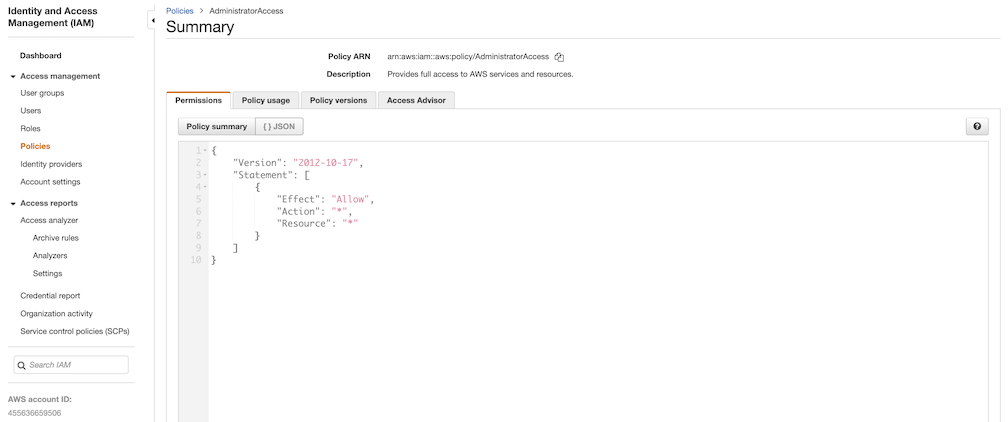

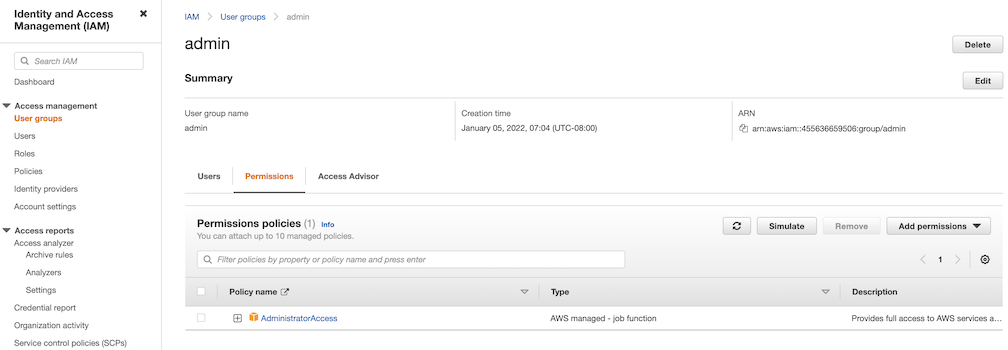

In order for Harbor to proxy a remote private ECR registry, an IAM credential with the necessary permissions needs to be created. Usually, this takes three steps:

-

Policy

This is where you specify all necessary permissions. Please refer to private repository policies, IAM permissions for pushing an image, and ECR policy examples to figure out the minimal set of required permissions.

For simplicity, the built-in policy AdministratorAccess is used here.

-

User group

This is an easy way to manage a pool of users who share the same set of permissions by attaching the policy to the group.

-

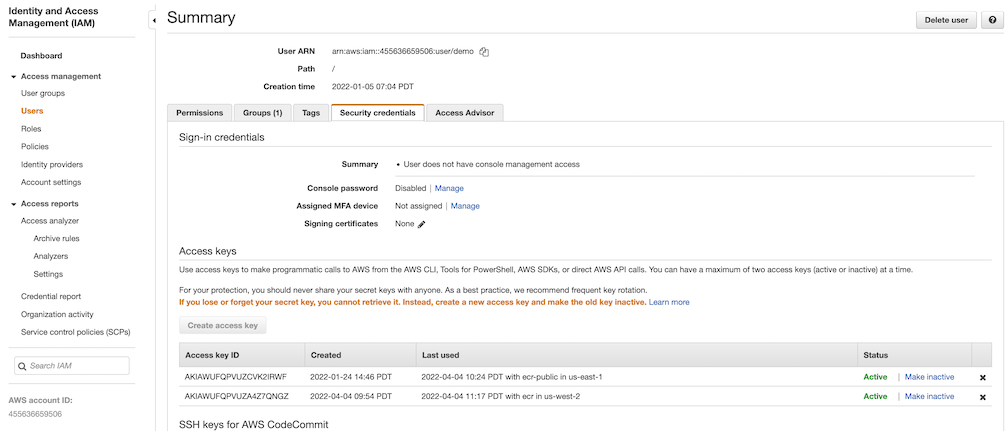

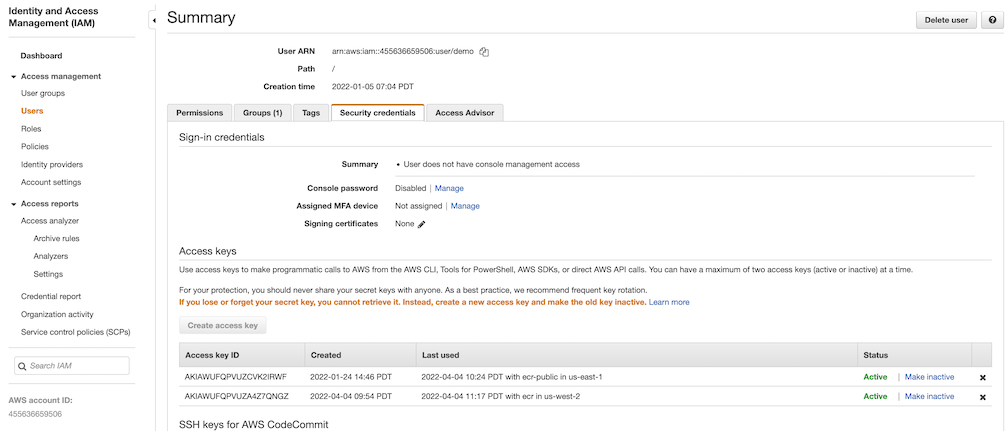

User

Create a user and add it to the user group. In addition, please navigate to Security credentials to generate an access key. An access key consists of two parts: an access key ID and a secret access key. Please save both, as they are used in the next step.

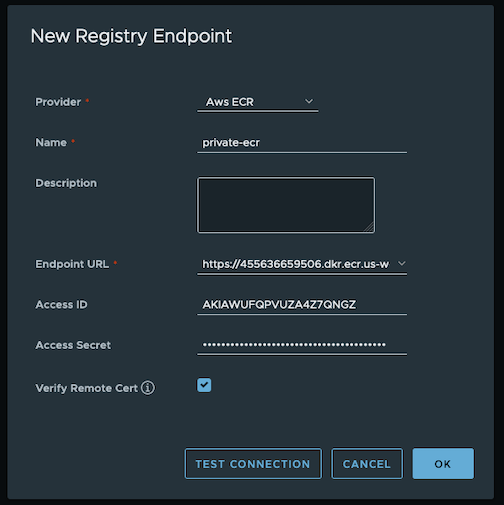

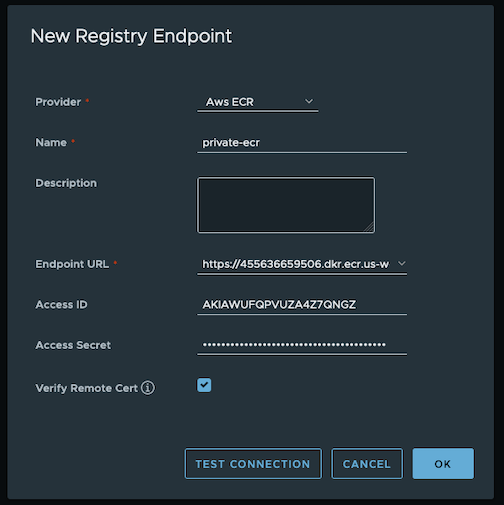

Navigate to Registries on the left panel, and then click on the NEW ENDPOINT button. Choose Aws ECR as the Provider, enter private-ecr as the Name and https://[ACCOUNT NUMBER].dkr.ecr.us-west-2.amazonaws.com/ as the Endpoint URL, use the access key ID part of the generated access key as the Access ID, and use the secret access key part of the generated access key as the Access Secret. Save it by clicking on OK.

-

-

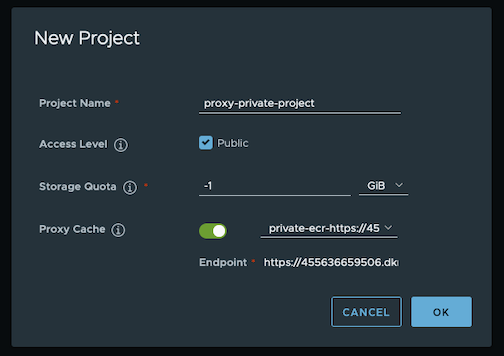

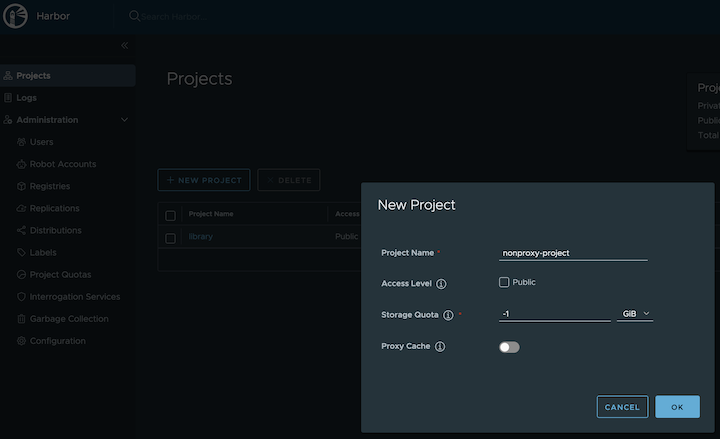

Create a proxy project

Navigate to Projects on the left panel and click on the NEW PROJECT button. Enter proxy-private-project as the Project Name, check Public access level, turn on Proxy Cache, and choose private-ecr from the pull-down list. Save the configuration by clicking on OK.

-

Pull images

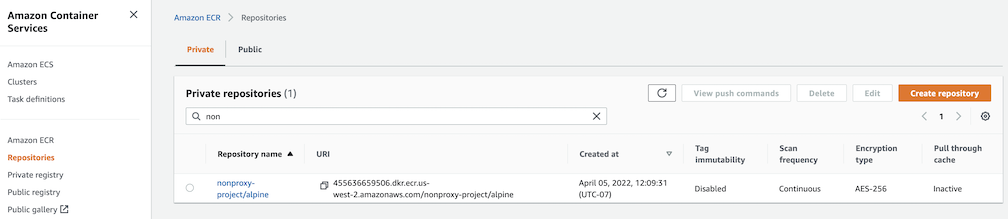

Create a repository in the target private ECR registry

Push an image to the created repository

    docker pull alpine
    docker tag alpine [ACCOUNT NUMBER].dkr.ecr.us-west-2.amazonaws.com/alpine:latest
    docker push [ACCOUNT NUMBER].dkr.ecr.us-west-2.amazonaws.com/alpine:latest

Note

harbor.eksa.demo:30003 should be replaced with whatever externalURL is set to in the Harbor package YAML file.

docker pull harbor.eksa.demo:30003/proxy-private-project/alpine:latest
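The docker push to the private ECR registry in the steps above only succeeds if Docker is already authenticated against that registry, which the steps do not show. A minimal sketch of one common way to do this follows, assuming a recent AWS CLI and Docker are installed and AWS credentials are configured. The function names are illustrative, and 123456789012 is a placeholder account number.

```shell
# Build the registry host name for a private ECR registry.
#   $1: AWS account number, $2: AWS region
ecr_registry() {
  echo "$1.dkr.ecr.$2.amazonaws.com"
}

# Log Docker in to the private ECR registry using a short-lived password
# obtained from the AWS CLI.
ecr_login() {
  aws ecr get-login-password --region "$2" \
    | docker login --username AWS --password-stdin "$(ecr_registry "$1" "$2")"
}

# Usage (placeholder account number):
#   ecr_login 123456789012 us-west-2
```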

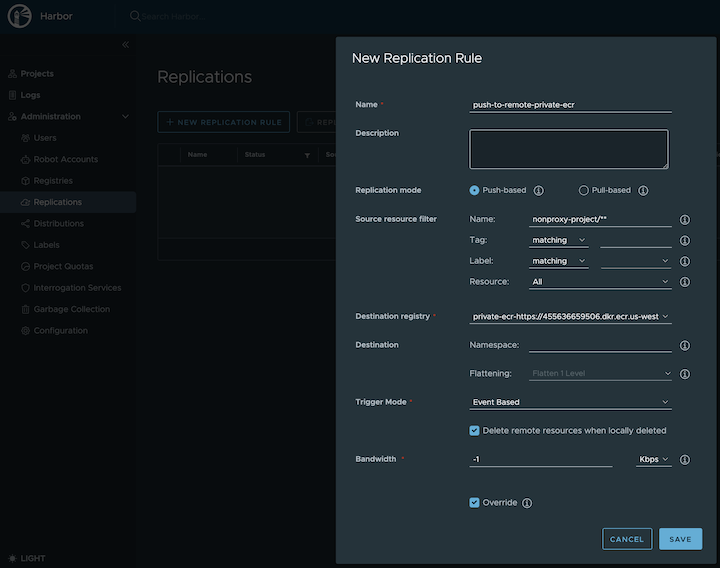

Repository replication from Harbor to a private Amazon Elastic Container Registry (ECR) repository

This use case is to use Harbor to replicate local images and charts to a private ECR repository in push mode. When a replication rule is set, all resources that match the defined filter patterns are replicated to the destination registry when the triggering condition is met.

-

Login

Log in to the Harbor web portal with the default credential as shown below

admin Harbor12345

-

Create a nonproxy project

-

Create a registry proxy

In order for Harbor to proxy a remote private ECR registry, an IAM credential with the necessary permissions needs to be created. Usually, this takes three steps:

-

Policy

This is where you specify all necessary permissions. Please refer to private repository policies, IAM permissions for pushing an image, and ECR policy examples to figure out the minimal set of required permissions.

For simplicity, the built-in policy AdministratorAccess is used here.

-

User group

This is an easy way to manage a pool of users who share the same set of permissions by attaching the policy to the group.

-

User

Create a user and add it to the user group. In addition, please navigate to Security credentials to generate an access key. An access key consists of two parts: an access key ID and a secret access key. Please save both, as they are used in the next step.

Navigate to Registries on the left panel, and then click on the NEW ENDPOINT button. Choose Aws ECR as the Provider, enter private-ecr as the Name and https://[ACCOUNT NUMBER].dkr.ecr.us-west-2.amazonaws.com/ as the Endpoint URL, use the access key ID part of the generated access key as the Access ID, and use the secret access key part of the generated access key as the Access Secret. Save it by clicking on OK.

-

-

Create a replication rule

-

Prepare an image

Note

harbor.eksa.demo:30003 should be replaced with whatever externalURL is set to in the Harbor package YAML file.

    docker pull alpine
    docker tag alpine:latest harbor.eksa.demo:30003/nonproxy-project/alpine:latest

-

Authenticate with Harbor with the default credential as shown below

    admin
    Harbor12345

Note

harbor.eksa.demo:30003 should be replaced with whatever externalURL is set to in the Harbor package YAML file.

    docker logout
    docker login harbor.eksa.demo:30003

-

Push images

Create a repository in the target private ECR registry

Note

harbor.eksa.demo:30003 should be replaced with whatever externalURL is set to in the Harbor package YAML file.

    docker push harbor.eksa.demo:30003/nonproxy-project/alpine:latest

The image should appear in the target ECR repository shortly.